TikTok suggests eating disorder and self-harm content to new teen accounts within minutes, study finds

A study into TikTok’s video recommendation algorithm has found it suggests eating disorder and self-harm content to some new teen accounts within minutes.

Research from the Centre for Countering Digital Hate (CCDH) found one account was shown suicide content within 2.6 minutes, and another was suggested eating disorder content within eight minutes.

Further investigation by Sky News also found evidence of harmful eating disorder content being recommended through TikTok’s suggested searches function, even when the search terms used were not explicitly harmful.

The UK’s eating disorder charity, BEAT, has said the findings are “extremely alarming” and has called on TikTok to take “urgent action to protect vulnerable users.”

Content warning: this article contains references to eating disorders and self-harm

TikTok’s For You Page offers a stream of videos that are suggested to the user according to the type of content they engage with on the app.

The social media company says recommendations are based on a number of factors, including video likes, follows, shares and device settings like language preference.

But some have raised concerns about the way this algorithm behaves when it comes to recommending harmful content.

This is one of the videos suggested during the study. The text reads ‘she’s skinnier’ and the music playing over the video says ‘I’ve been starving myself for you’. Pic: Centre for Countering Digital Hate via TikTok

The CCDH set up two new accounts in each of the UK, US, Canada and Australia. For each, a traditionally female username was given and the age was set to 13.

Each country’s second account also included the phrase “loseweight” in its username, which separate research has shown to be a trait exhibited by accounts belonging to vulnerable users.

Researchers at CCDH analysed video content shown to each new account’s For You Page over a period of 30 minutes, only interacting with videos related to body image and mental health.

It found that the standard teen users were served videos related to mental health and body image every 39 seconds.

Not all of the content recommended at this rate was harmful, and the study did not differentiate between positive content and negative content.

However, it found that all users were served eating disorder content and suicide content, sometimes very quickly.

CCDH’s research also found that the vulnerable accounts were shown this kind of content three times as often as the standard accounts, and that the content they were shown was more extreme.

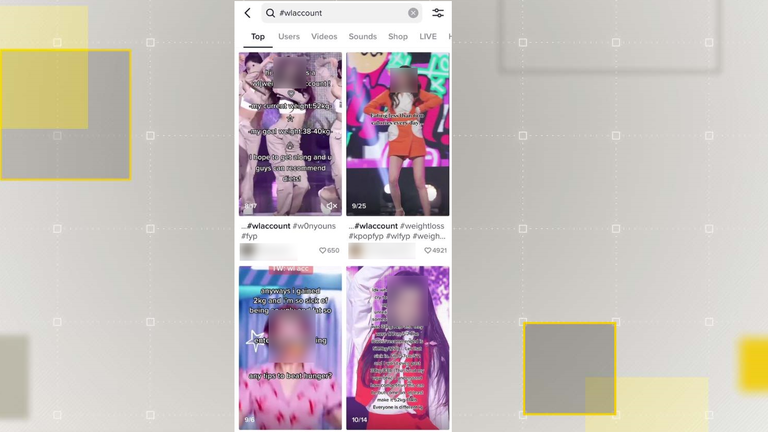

Centre for Countering Digital Hate found 56 hashtags associated with eating disorder content. 35 of those contained a high concentration of pro-eating disorder content. Pic: TikTok

It follows CCDH’s findings that TikTok is host to a community of eating disorder content that has amassed over 13.2 billion views across 56 different hashtags.

Some 59.9 million of those views were on hashtags that contained a high concentration of pro-eating disorder videos.

However, TikTok says that the activity and resulting experience captured in the study “does not reflect behaviour or genuine viewing experiences of real people.”

Eating disorder content is banned on TikTok and it says it regularly removes content that violates its terms and conditions. Pic: REUTERS/Dado Ruvic/File Photo

Kelly Macarthur began suffering from an eating disorder at the age of 14. She’s now recovered from her illness, but as a content creator on TikTok, she has concerns about the impact that some of its content could have on people who are suffering.

“When I was unwell, I thought social media was a really healthy place to be where I could vent about my problems. But in reality, it was full of pro-anorexia material giving you different tips and triggers,” she told Sky News.

“I’m seeing the same thing happen to young people on TikTok.”

Further investigation from Sky News also found harmful eating disorder content being suggested by TikTok in other areas of the app, even when it had not been explicitly searched for.

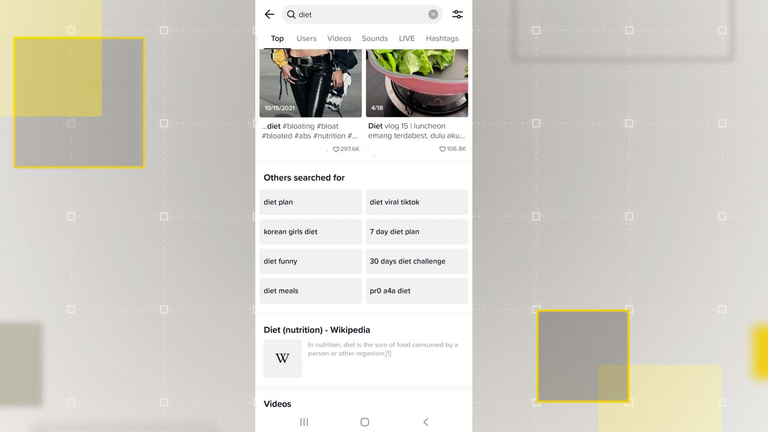

Sky News conducted its own research into TikTok’s recommendation algorithm using several different accounts. But instead of analysing the For You Page, we searched for non-harmful terms like “weight loss” and “diet” in TikTok’s search bar.

Sky News found that searches for terms like ‘diet’ return suggested searches associated with eating disorder content. Pic: TikTok

A search for the term “diet” on one account brought up another suggestion: “pr0 a4a”.

That is code for “pro ana” which relates to pro-anorexia content.

TikTok’s community guidelines ban eating disorder related content on its platform and this includes prohibiting searches for terms that are explicitly associated with it.

But users will often make subtle edits to terminology that mean they can continue posting about certain issues without being spotted by TikTok’s moderators.

While the term “pro ana” is banned on TikTok, variations of it still appear.

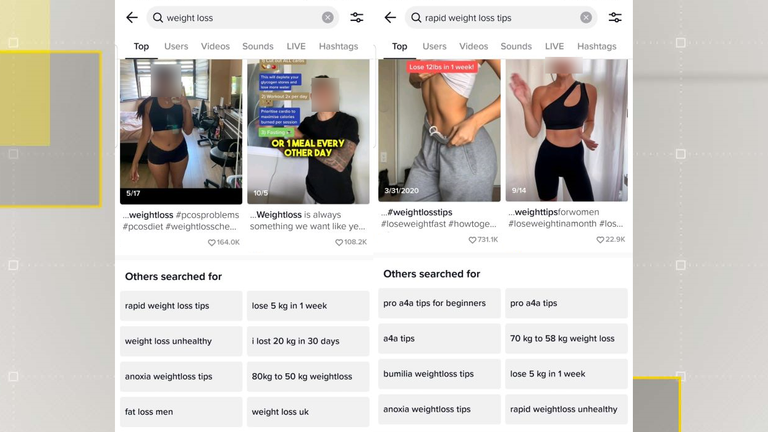

The left screenshot shows the suggested results for the term ‘weight loss’. The right screenshot shows the suggested results when the first suggested result is clicked on. Pic: TikTok

Sky News also found that eating disorder content can be accessed easily through TikTok’s user search function, even without explicitly searching for it.

A search for the term “weight loss” returns at least one account that appears to be an eating disorder account in its top 10 results.

Sky News reported the account to TikTok and it has since been removed.

“It’s alarming that TikTok’s algorithm is actively pushing users towards damaging videos which can have a devastating impact on vulnerable people,” said Tom Quinn, director of external affairs at BEAT.

“TikTok and other social media platforms must urgently take action to protect vulnerable users from harmful content.”

Responding to the findings, a spokesperson for TikTok said: “We regularly consult with health experts, remove violations of our policies, and provide access to supportive resources for anyone in need.

“We’re mindful that triggering content is unique to each individual and remain focused on fostering a safe and comfortable space for everyone, including people who choose to share their recovery journeys or educate others on these important topics.”

The Data and Forensics team is a multi-skilled unit dedicated to providing transparent journalism from Sky News. We gather, analyse and visualise data to tell data-driven stories. We combine traditional reporting skills with advanced analysis of satellite images, social media and other open source information. Through multimedia storytelling we aim to better explain the world while also showing how our journalism is done.
