TikTok launches an in-app US midterms Elections Center, shares plan to fight misinformation – TechCrunch

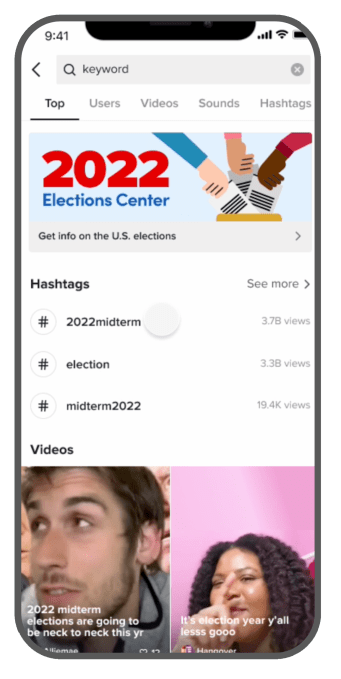

TikTok announced its midterms Elections Center will go live in the app in the U.S. starting today, August 17, 2022, where it will be available to users in more than 40 languages, including English and Spanish.

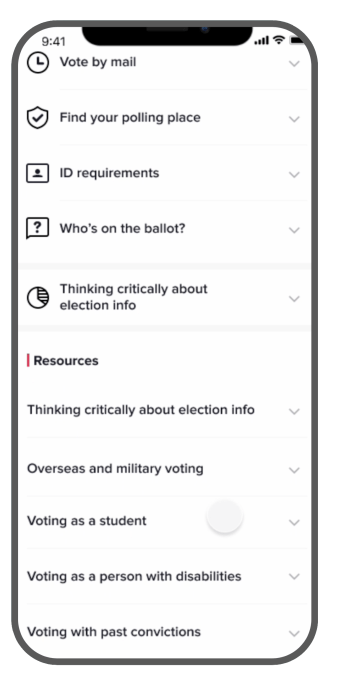

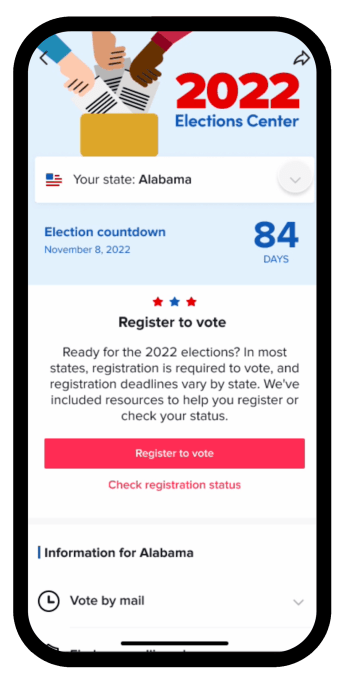

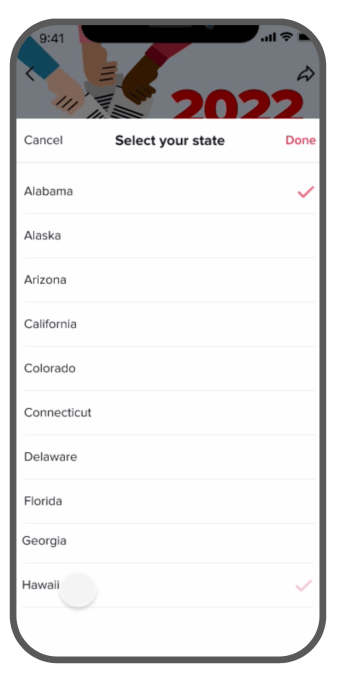

The new feature will allow users to access state-by-state election information, including details on how to register to vote, how to vote by mail, how to find your polling place and more, provided by TikTok partner NASS (the National Association of Secretaries of State). TikTok also newly partnered with Ballotpedia to allow users to see who’s on their ballot, and is working with various voting assistance programs — including the Center for Democracy in Deaf America (for deaf voters), the Federal Voting Assistance Program (overseas voting), the Campus Vote Project (students) and Restore Your Vote (people with past convictions) — to provide content for specific groups. The AP will continue to provide the latest election results in the Elections Center.

The center can be accessed through a number of places inside the TikTok app, including by clicking on content labels found on video, via a banner in the app’s Friends tab, as well as through hashtag and search pages.

Image Credits: TikTok

The company also detailed its broader plan to combat election misinformation on its platform, building on lessons it learned from the 2020 election cycle. For starters, it launched this in-app Elections Center six weeks earlier than it did in 2020. It’s also ramping up its efforts to educate the creator community about its rules related to election content. This will include the launch of an educational series on the Creator Portal and TikTok, and briefings with both creators and agencies to further clarify its rules.

Much of how TikTok will address election misinformation has not changed, however.

On the policy side, TikTok says it will monitor for content that violates its guidelines. This includes misinformation about how to vote, harassment of election workers, harmful deep fakes of candidates and incitement to violence. Depending on the violation, TikTok may remove the content or the user’s account, or ban the device. In addition, TikTok may choose to redirect search terms or hashtags to its community guidelines, as it did during the prior election cycle for the hashtags associated with terms like “stop the steal” or “sharpiegate,” among others.

The company reiterated its decision to ban political advertising on the platform, which extends not only to ads paid for through its ads platform but also to branded content posted by creators themselves. That means a political action committee could not work around TikTok policies to instead pay a creator to make a TikTok video advocating for their political position, the company claims.

Of course, just as important as the policies themselves is TikTok’s ability to enforce them.

The company says it will use a combination of automated technology and members of its Trust and Safety team to help drive moderation decisions. The former, TikTok admits, can only go so far. Technology can be trained to identify keywords associated with conspiracy theories, but only a human can tell whether a video is promoting a conspiracy theory or working to debunk it. (The latter is permitted by TikTok guidelines.)

TikTok declined to share how many staffers are dedicated to the job of moderating election misinformation, but noted the larger Trust and Safety team has grown over the past several years. This election, however, will be of increased importance as it follows shortly after TikTok shifted its U.S. user data to Oracle’s cloud and has now tasked the company with auditing its moderation policies and algorithmic recommendation systems.

“As part of Oracle’s work, they will be regularly vetting and validating both our recommendation and our moderation models,” confirmed TikTok’s head of U.S. Safety, Eric Han. “What that means is there’ll be regular audits of our content moderation processes, both from automated systems…technology — and how do we detect and triage certain things — as well as the content that is moderated and reviewed by humans,” he explained.

“This will help us have an extra layer and check to make sure that our decisions highlight what our community guidelines are enforcing and what we want our community guidelines to do. And obviously, that builds on previous announcements that we’ve talked about in the past in our relationship and partnership with Oracle on data storage for U.S. users,” Han said.

Election content can be triggered for moderation in a number of ways. If the community flags a video in the app, it would be reviewed by TikTok’s teams, who may also work with third-party threat intelligence firms to detect things like coordinated activities and covert operations, like those from foreign powers looking to influence U.S. elections. But a video may also be reviewed if it rises in popularity in order to keep TikTok’s main feed — its For You feed — from spreading false or misleading information. While videos are being evaluated by a fact checker, they are not eligible for recommendation to the For You feed, TikTok notes.

The company says it’s now working with a dozen fact-checking partners worldwide, supporting over 30 languages. Its U.S.-based partners include PolitiFact, Science Feedback and Lead Stories. When these firms determine a video to be false, TikTok says the video will be taken down. If it’s returned as “unverified,” meaning the fact checker can’t make a determination, TikTok will reduce its visibility. Unverified content can’t be promoted to the For You feed and will receive a label that indicates the content could not be verified. If a user tries to share the video, they’ll be shown a pop-up asking them if they’re sure they want to share it. These sorts of tools have been shown to impact user behavior. For instance, TikTok said that during tests of its unverified labels in the U.S., videos saw a 24% decline in sharing rates.

In addition, all videos related to the elections — including those from politicians, candidates, political parties or government accounts — will be labeled with a link that redirects to the in-app election center. TikTok will also host PSAs on election-related hashtags like #midterms and #elections2022.

TikTok symbolizes a new era of social media compared to longtime stalwarts like Facebook and YouTube, but it’s already repeating some of the same mistakes. The short-form social platform wasn’t around during Facebook’s Russian election interference scandal back in 2016, but it isn’t immune from the same concerns about misinformation and disinformation that have plagued more traditional social platforms.

Like other social networks, TikTok relies on a blend of human and automated moderation to detect harmful content at scale — and like its peers it leans too heavily on the latter. TikTok also outlines its content moderation policies in lengthy blog posts, but at times fails to live up to its own lofty promises.

In 2020, a report from watchdog group Media Matters for America found that 11 popular videos promoting false pro-Trump election conspiracies attracted more than 200,000 combined views within a day of the U.S. presidential election. The group noted that the selection of misleading posts was only a “small sample” of election misinformation in wide circulation on the app at the time.

With TikTok gaining popularity and mainstream adoption outside of viral dance videos and the Gen Z early adopters it’s known for, the misinformation problem only stands to worsen. The app has grown at a rapid clip in the last couple of years, hitting three billion downloads by the middle of 2021 with projections that it would pass the 750 million user mark in 2022.

This year, TikTok has emerged as an unlikely but vital source of real-time updates and open source intelligence about the war in Ukraine, taking such a prominent position in the information ecosystem that the White House decided to brief a handful of star creators about the conflict.

But because TikTok is a wholly video-focused app that lacks the searchable text of a Facebook or Twitter post, tracing how misinformation travels on the app is challenging. And like the secretive algorithms that propel hit content on other social networks, TikTok’s ranking system is sealed away in a black box, obscuring the forces that propel some videos to viral heights while others languish.

A researcher studying the Kenyan information ecosystem with the Mozilla Foundation found that TikTok is emerging as an alarming vector of political disinformation in the country. “While more mature platforms like Facebook and Twitter receive the most scrutiny in this regard, TikTok has largely gone under-scrutinized — despite hosting some of the most dramatic disinformation campaigns,” Mozilla Fellow Odanga Madung wrote. He describes a platform “teeming” with misleading claims about Kenya’s general election that could inspire political violence there.

Mozilla researchers had similar concerns in the run-up to 2021’s German federal election, finding that the company dragged its feet to implement fact-checking and failed to detect a number of popular accounts impersonating German politicians.

TikTok may have also played an instrumental role in elevating the son of a dictator to the presidency in the Philippines. Earlier this year, the campaign of Ferdinand “Bongbong” Marcos Jr. flooded the social network with flattering posts and bought influencers to rewrite a brutal family legacy.

Though TikTok and Oracle are now engaged in some sort of auditing agreement, the details of how this will take place are undisclosed, as is the extent to which Oracle’s findings will be made public. That means we may not know for some time to what extent TikTok will be able to keep election misinformation under control.
